Cameras and LiDAR sensors are known to fail in harsh industrial conditions such as fog, dust, dirt, and rain. 3D imaging sonar sensors, which use ultrasound rather than light, continue to operate in these conditions. For industries such as heavy manufacturing, mining, and agriculture, in-air sonar sensors are therefore well suited to keeping autonomous systems running in adverse situations.

As is often the case in sensor development, making everything work in real time is difficult: both the processing of the raw sonar signals and the navigation algorithms themselves have to run without noticeable delay. One of the major challenges is converting sonar signals into 3D images of the environment in real time.

Looking to Nature for Inspiration

Cosys-Lab at the University of Antwerp was founded with the goal of pushing the boundaries of cyber-physical systems such as sensors and their low- and high-level applications. The lab is best known for its advanced in-air 3D imaging sonar sensors, which are inspired by bats and developed in-house. Bats use echolocation to find their way around: they emit sounds and listen to the echoes picked up by their ears. It’s a brilliant way of perceiving the world, and it works wonderfully.

The team wanted to use their in-house developed 3D imaging sonar sensors as the input to a navigation algorithm inspired by the way bats perceive the world through echolocation while flying. The algorithm had to run in real time on real-life mobile robots like Husky UGV. It fuses the measurements of several sonar sensors into a single representation of the environment around the robot and combines this with predefined zones around the vehicle. Together, these allow safe steering and movement behaviour, whether an operator is present or the mobile vehicle is completely autonomous. Most importantly, the system was designed to be modular, so the sonar sensors can be positioned anywhere on the mobile robot. Compared to previous, similar research, this makes it much more practical for real-world industrial use cases.
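
To make the idea of modular placement concrete, the sketch below shows one simple way that detections from differently mounted sensors could be brought into a single robot-centred representation. It is an illustration only, not the team's implementation: the `SensorPose` class, the 2D (range, azimuth) detection format, and the mounting values are all assumptions made for this example.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorPose:
    """Hypothetical mounting pose of one sonar sensor in the robot frame."""
    x: float    # metres forward of the robot centre
    y: float    # metres to the left of the robot centre
    yaw: float  # radians, counter-clockwise from the robot's forward axis


def to_robot_frame(pose, detections):
    """Convert (range, azimuth) detections from the sensor frame
    into (x, y) points in the robot frame."""
    points = []
    for rng, azimuth in detections:
        angle = pose.yaw + azimuth
        points.append((pose.x + rng * math.cos(angle),
                       pose.y + rng * math.sin(angle)))
    return points


def fuse(sensors):
    """Merge the detections of all sensors into one robot-centred point list."""
    fused = []
    for pose, detections in sensors:
        fused.extend(to_robot_frame(pose, detections))
    return fused


# Two assumed mounting positions: one sensor facing forward, one facing left.
front = SensorPose(x=0.4, y=0.0, yaw=0.0)
left = SensorPose(x=0.0, y=0.3, yaw=math.pi / 2)

fused_points = fuse([
    (front, [(1.0, 0.0)]),  # echo 1 m straight ahead of the front sensor
    (left, [(2.0, 0.0)]),   # echo 2 m straight ahead of the left-facing sensor
])
```

Because every detection is expressed relative to the robot before it is used, moving a sensor only means changing its `SensorPose`; the downstream navigation logic stays the same.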

How Can Echolocation Help Robots “See”?

Husky UGV served as the hub of the team’s sensor network and signal processing pipeline, as well as the mobile robot used for autonomous navigation. For validation, the team used three of their own 3D sonar sensors, called eRTIS, and an Ouster OS0-128 LiDAR. The Ouster LiDAR is a high-end sensor with a wide field of view, which allowed them to generate an accurate map of the environment while validating the experiments with the eRTIS sensors. Whenever the team’s synchronized 3D imaging sonar sensors generate a new set of measurements, their internal embedded GPU systems execute the digital signal processing pipeline to calculate 3D images of the environment. These images are sent over the Ethernet network to the Husky UGV’s internal PC, where they are fed into the navigation algorithm.

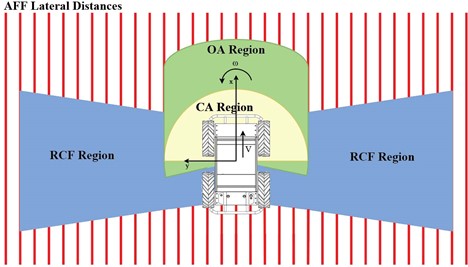

What’s particularly intriguing about the team’s acoustic model is that it supports modular sensor placement, which means a sensor can be mounted anywhere on the vehicle. Based on this model, the team defined zones around the mobile robot; when an object is detected inside a zone, a specific motion behaviour is activated. These motion laws are arranged in a layered architecture so that priorities can be assigned. When a potential collision is detected, for example, the collision avoidance layer takes precedence over the general steering motion. The team currently employs three major layers: collision avoidance, object avoidance, and corridor following. More layers can be added in the future.
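
A layered, priority-based architecture of this kind can be sketched in a few lines. The example below is a simplified illustration under stated assumptions, not the team's controller: the zone radii, the gains, the circular zone shapes, and the function names are all hypothetical, and obstacles are assumed to arrive as (x, y) points in the robot frame with x pointing forward and y pointing left.

```python
import math

# Hypothetical zone radii in metres; the real zone shapes and sizes are not given.
COLLISION_RADIUS = 0.5
AVOIDANCE_RADIUS = 1.5


def collision_avoidance(points, cmd):
    """Highest priority: stop and turn in place when an object ahead
    is inside the collision zone."""
    for x, y in points:
        if x > 0 and math.hypot(x, y) < COLLISION_RADIUS:
            # Negative angular velocity turns right, away from an object on the left.
            return (0.0, -0.5 if y >= 0 else 0.5)
    return None


def object_avoidance(points, cmd):
    """Middle priority: slow down and steer away from the nearest object ahead."""
    ahead = [(math.hypot(x, y), y) for x, y in points
             if x > 0 and math.hypot(x, y) < AVOIDANCE_RADIUS]
    if not ahead:
        return None
    _, y = min(ahead)  # nearest object first
    linear, _ = cmd
    return (0.5 * linear, -0.3 if y >= 0 else 0.3)


def corridor_following(points, cmd):
    """Lowest priority: steer toward the side with more lateral clearance."""
    left = [y for _, y in points if y > 0]
    right = [-y for _, y in points if y < 0]
    if not left or not right:
        return None
    linear, _ = cmd
    # Negative when the right side has more clearance, so the robot steers right.
    return (linear, 0.5 * (min(left) - min(right)))


# Layers in descending priority; further layers could be appended here.
LAYERS = [collision_avoidance, object_avoidance, corridor_following]


def arbitrate(points, cmd):
    """Return the (linear, angular) command of the first layer that activates,
    or the unmodified input command when no layer does."""
    for layer in LAYERS:
        result = layer(points, cmd)
        if result is not None:
            return result
    return cmd
```

Each layer either returns a command or declines by returning `None`, so a higher layer overrides everything below it simply by being checked first. Adding a new behaviour means writing one more function and inserting it at the right position in `LAYERS`.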

Navigation zones around vehicle for behaviour activation based on acoustic model

Husky UGV Aids Testing Efforts

The team validated the system in simulation first, then moved on to a real-time implementation of the navigation algorithm on the Husky UGV. The system supports two modes: one in which the operator still provides manual input velocities, which are modulated with the algorithm output to navigate safely, and one in which the vehicle is fully autonomous. The pre-installed ROS middleware on the Husky UGV was used to send the final desired velocities to the vehicle. The team then tested six different sensor configurations with up to three 3D sonar sensors, using a LiDAR sensor to generate a map of the environment for validation purposes. The controller ensured that the vehicle avoided collisions in all scenarios, and the resulting paths were stable, with only minor differences between sensor configurations.
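
The two modes can be illustrated with a small sketch. How the team actually modulates the operator's input is not described here, so the linear blending scheme, the distance thresholds, and all names below are assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Velocity:
    linear: float   # m/s, forward positive
    angular: float  # rad/s, counter-clockwise positive


def authority_from_distance(nearest, safe=2.0, stop=0.5):
    """Hypothetical rule: the controller's authority grows linearly from 0
    at the `safe` distance to 1 at the `stop` distance (both in metres)."""
    if nearest >= safe:
        return 0.0
    if nearest <= stop:
        return 1.0
    return (safe - nearest) / (safe - stop)


def modulate(operator, controller, weight):
    """Blend the operator command with the controller command.
    `weight` is the controller's authority, clamped to [0, 1]."""
    w = min(max(weight, 0.0), 1.0)
    return Velocity(
        linear=(1 - w) * operator.linear + w * controller.linear,
        angular=(1 - w) * operator.angular + w * controller.angular,
    )


def final_velocity(mode, operator, controller, nearest):
    """Select the command sent to the vehicle for the given mode."""
    if mode == "autonomous":
        return controller  # the operator input is ignored
    return modulate(operator, controller, authority_from_distance(nearest))


# Operator drives straight ahead; the controller wants to stop and turn left.
operator_cmd = Velocity(linear=1.0, angular=0.0)
controller_cmd = Velocity(linear=0.0, angular=0.4)
assisted = final_velocity("assisted", operator_cmd, controller_cmd, nearest=1.25)
```

With the nearest obstacle at 1.25 m, the assumed rule gives the controller half of the authority, so the assisted command is an even mix of the two inputs. On a ROS-based platform such as Husky UGV, the result would typically be published as a `geometry_msgs/Twist` message; that step is left out so the sketch runs without ROS.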

(Left) Heat map of the trajectory distribution of the simulation scenario

(Right) Results of real experiments with the mobile platform navigating three different scenarios, demonstrating stable and safe behavior

Thanks to Husky UGV, Cosys-Lab was able to iterate quickly on multiple sonar placement configurations. Once the navigation algorithm had been developed and tested in simulation, connecting it to the internal systems of Husky UGV through the pre-installed ROS middleware was simple. The current work would not have been possible without Husky UGV, because the team’s previous mobile robots were either too small to carry multiple sensors in various placement configurations or had limited computing resources. Their cumbersome software systems and limited documentation would also have made implementing the software on the older platforms more time-consuming.

By contrast, Husky UGV enabled the team to place the sensors modularly and switch quickly between sensor placement configurations. The ROS middleware also let them set up their navigation controller in a matter of hours, rather than having to handle the low-level communication with the vehicle internals themselves. “The ample space inside Husky UGV allowed us to place all our networking and sensor synchronization equipment inside, out of harm’s way,” said Ph.D. researcher Wouter Jansen. “The top plate made it simple for us to create a setup in which we could place our sensors in a variety of configurations. Furthermore, the robot’s rugged design allows us to move to rough outdoor environments in the future.”

So far, the project has been a success: using only the sonar sensors, the robot navigates indoor environments safely and with stable trajectories while supporting modular sensor placement. To actuate different motion behaviours, the navigation controller employs sensor fusion and manually designed zones around the mobile robot. In the future, the team hopes to move the project to other environments, both indoors and outdoors, and to experiment with different (rough) conditions. The team’s work was published in the Proceedings of the 2021 International Conference on Indoor Positioning and Indoor Navigation (IPIN).

The Cosys-Lab team members who worked on this project are Wouter Jansen (Ph.D. Researcher), Dennis Laurijssen (Postdoctoral Research Engineer), and Jan Steckel (Professor).