Chasing Higher Productivity

With the development of automation and robotics, the modernizing world has reached unprecedented levels of productivity. While this is best seen in industries like manufacturing, others, like construction, have struggled to see the same dramatic improvement. A team of researchers from the Construction Automation and Robotics Lab (CARL) at North Carolina State University, in collaboration with the Active Robotic Sensing (ARoS) Laboratory and the Laboratory for Interpretable Visual Modeling, Computing and Learning (iVMCL), believes that the lack of advanced automation in construction is to blame. They have also identified effective construction progress monitoring as one of the largest influences on the success of a construction project. Although automating this process has been attempted, monitoring is still far more commonly performed by site managers through on-site data collection and analysis, which is time-consuming and prone to errors. If data collection were automated, the project management team could instead spend that time responding to progress deviations with timely and effective decisions.

Blueprints for Change

The solution? An integrated robotic system. The CARL team (Khashayar Asadi, Hariharan Ramshankar, Harish Pullagurla, Aishwarya Bhandare, Suraj Shanbhag, Pooja Mehta, Spondon Kundu, Kevin Han, Edgar Lobaton, and Tianfu Wu) specializes in conducting state-of-the-art research to develop new technology that solves emerging problems and to support programs of graduate education. They therefore felt confident in proposing a vision-based integrated mobile robotic system for real-time applications in construction.

The proposed robot, built from a Husky UGV platform as a base, would be capable of autonomous navigation and environment detection (including obstacles and pathways). This would grant it significant potential in various construction applications, such as site surveying, progress and safety monitoring, and structural health monitoring. Their most recent work represents the first effort in construction to develop an automated data collection system using an unmanned ground vehicle (UGV).

The goal of the project was therefore to create an autonomous robotic system capable of moving through construction sites and recording video of the environment. That video would then be processed using various advanced machine learning and computer vision algorithms to make the robot contextually aware of its surroundings by mapping the environment and detecting obstacles and pathways in real time.

Inside CARL’s Toolbox

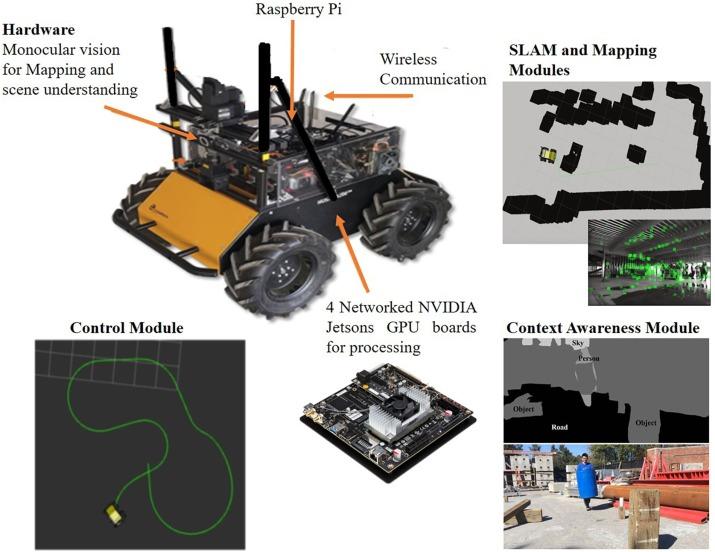

Alongside using Husky UGV as a base, CARL customized their solution by incorporating a stack of NVIDIA Jetson TX1 boards, a Raspberry Pi, a router, and a monocular Logitech web camera. The Jetson boards are low-power embedded devices with integrated Graphics Processing Units (GPUs). They handle the localization, mapping, motion planning, and context awareness (via semantic image segmentation) tasks in the proposed system (see Figure 1). A custom fan was attached to keep the computers cool. The monocular camera captures a live video feed of the surroundings. A custom Convolutional Neural Network (CNN) then segments the images in real time and extracts useful information about the environment. The camera feed is also used to generate a global map of the environment in real time using simultaneous localization and mapping (SLAM). By fusing the global map with the detections from the neural network, the system can ultimately determine navigable regions in its surroundings.

Figure 1. Overview of system components: hardware and the different modules (context awareness, control, SLAM, and mapping).
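The fusion step described above can be illustrated with a small sketch. The source does not say how CARL combines the SLAM map with the segmentation output, so everything here is an assumption made for illustration: the function name `fuse_navigable`, the grid encoding (0 = free, 1 = occupied for the map; 1 = ground, 0 = anything else for the segmentation), and the premise that both inputs have already been projected onto the same top-down grid.

```python
from typing import List

Grid = List[List[int]]


def fuse_navigable(occupancy: Grid, ground_mask: Grid) -> Grid:
    """Mark a cell navigable (1) only if the map says it is free (0)
    AND the segmentation labels it as ground (1).

    Hypothetical helper: the encoding and the simple AND rule are
    illustrative assumptions, not CARL's published method.
    """
    if len(occupancy) != len(ground_mask) or any(
        len(o_row) != len(g_row) for o_row, g_row in zip(occupancy, ground_mask)
    ):
        raise ValueError("occupancy and ground_mask must have the same shape")

    return [
        [1 if occ == 0 and ground == 1 else 0 for occ, ground in zip(o_row, g_row)]
        for o_row, g_row in zip(occupancy, ground_mask)
    ]


# Toy 3x3 example: both grids are assumed to be aligned cell for cell.
occupancy = [
    [0, 0, 1],
    [0, 1, 1],
    [0, 0, 0],
]
ground_mask = [
    [1, 1, 1],
    [1, 1, 0],
    [0, 1, 1],
]

navigable = fuse_navigable(occupancy, ground_mask)
for row in navigable:
    print(row)
```

Requiring agreement between the two sources is the conservative choice: a cell the map considers free is still rejected if the network does not recognize it as ground, and vice versa. A real system would also need the camera geometry to align the image-space segmentation with the map, which this sketch skips.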

The software of the robotic system consisted of a custom computing stack with OpenCV, ROS, Eigen, and a modified ORB-SLAM for the vision pipeline, while the Lua-based Torch framework was used for the deep learning scene segmentation stage.

The resulting system is unique in that it offers significant advantages over similar systems used in other industries:

- The system presents the first use of a monocular camera (a camera with one lens, such as a normal webcam) for generating navigable maps of the environment for autonomous navigation.

- This is also the first system that integrates multiple modules in real-time for scene understanding, localization (locating the position of the robot), and mapping on a single modular framework.

Such algorithms are usually computationally heavy and have previously been tested on expensive computers with high computing capabilities. In the proposed system, however, CARL implemented the algorithms in real time on much smaller and less expensive processors (NVIDIA Jetson TX1s) that can easily be mounted on the ground robot. This saved the team computational effort and cost, making their system more practical and economical than existing systems.

Streamlining Development

However, the project was not without its challenges. Integrating many subsystems into a single autonomous system is tricky. The underlying hardware platform has to be robust and capable enough to handle unforeseen circumstances. Likewise, the software should be fault-tolerant and designed to work in diverse scenarios. The system’s design for real-time operation imposed further constraints on the computation time of CARL’s software stack. Building a simulation environment for the platform is also key: it is far easier and safer to test inside an environment that the developers have complete control over.

CARL believes that the true success of an autonomous system lies in the hardware. Only if the hardware’s behavior is well defined and guaranteed to work will it be possible to develop robust software. Otherwise, a significant amount of time will be spent trying to account for hardware failures, which would detract from the real software goals of speed and accuracy. This is where Husky UGV shone.

“The Clearpath Husky is a great robotic platform to work with,” said CARL team member Khashayar Asadi. “We chose the Clearpath platform because of its payload capacity, easy to use APIs, and well-maintained software wrappers for ROS.” The team also found that the on-board sensor suite integrated well with the rest of their stack, with the data being sufficient for their needs. The customizable cage that Husky UGV provides allowed CARL to mount a PTU (Pan and Tilt Unit) for the camera, which was crucial in aiding their auto-calibration process. The high payload (max of 75 kg/165 lbs) of the vehicle was also a boon, allowing the Husky to handle the four Jetson TX1s and a custom cooling system with aplomb.

The team also had to consider that construction sites have a lot of debris. The rugged tires of Husky UGV provided the needed traction and kept the robot from getting stuck in the mud. The long runtime was also conducive to their operations and testing. Without Husky UGV, CARL would have been forced to build a custom platform from scratch. This would have involved far more cost and effort upfront, especially since component failures or design issues cropping up later are ever-present dangers. Finally, they would also have had to dedicate time to interfacing with the hardware.

What’s Next?

The Construction Automation and Robotics Lab has been extremely active with its research and has published its work on the proposed system in the Journal of Automation in Construction, the highest-ranked journal in its research area. You can read the full report here. The results demonstrate the robustness and feasibility of deploying the proposed system for automated, real-time data collection in the near future.

CARL has also published the following two conference papers: Building an Integrated Mobile Robotic System for Real-Time Applications in Construction in the “35th International Symposium on Automation and Robotics in Construction (ISARC 2018)” and Vision-Based Obstacle Removal System for Autonomous Ground Vehicles Using a Robotic Arm in the “2019 ASCE International Conference on Computing in Civil Engineering (ASCE 2019)”. Finally, they also received the best poster award at the 2019 ASCE International Conference on Computing in Civil Engineering (ASCE 2019) for their poster entitled “Vision-based Obstacle Removal System for Autonomous Ground Vehicles Using a Robotic Arm”. For that project, they also used Husky UGV as the ground robot.

In the coming years, CARL members intend to extend their research by addressing the limitations of their current studies to make the system robust enough for construction applications. The limitations of the proposed system are as follows:

- Inaccessibility of some places for the UGV to collect data.

- Inefficient autonomous navigation in cluttered indoor scenes with many objects on the ground.

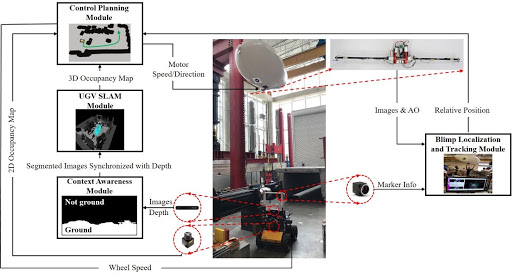

To address the first limitation, the team has proposed an integrated UGV-UAV system for automated data collection in construction (a paper currently under review for the Journal of Automation in Construction). In this project, the UGV-UAV system periodically visits a set of places of interest pre-selected by the construction management team. During this mission, the UGV autonomously moves through the site and continuously scans the environment with its sensors. The relative pose between the two vehicles is estimated consistently, which enables the UAV to follow the UGV’s path during its navigation. If a place of interest is not accessible to the UGV due to environmental constraints, the UGV sends the UAV to the desired location to scan the area. The UAV then returns to the UGV, and the heterogeneous team continues towards the next area of interest (see Figure 2).

Figure 2. UAV-UGV integrated robotic system, consisting of a UGV and a custom-built blimp for automated indoor data collection.
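The mission logic described above can be summarized as a short sketch. This is only an illustration of the decision flow as the text describes it, not the team’s implementation: the `Place` class, the `ugv_accessible` flag, and the `plan_mission` function are hypothetical names, and accessibility is assumed to be known in advance rather than detected by the robot on site.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Place:
    """A place of interest chosen by the construction management team."""
    name: str
    ugv_accessible: bool  # assumption: known up front for this sketch


def plan_mission(places: List[Place]) -> List[str]:
    """Return the ordered list of actions for one pass over all places.

    Hypothetical helper that mirrors the described behavior: the UAV
    follows the UGV and is dispatched only where the UGV cannot go.
    """
    actions: List[str] = []
    for place in places:
        actions.append(f"UGV navigates toward {place.name}; UAV follows")
        if place.ugv_accessible:
            actions.append(f"UGV scans {place.name}")
        else:
            actions.append(f"UAV dispatched to scan {place.name}")
            actions.append("UAV returns to UGV")
    return actions


mission = plan_mission([
    Place("lobby", ugv_accessible=True),
    Place("mezzanine", ugv_accessible=False),
])
for step in mission:
    print(step)
```

Keeping the UAV as a fallback rather than the primary scanner matches the description: the ground vehicle does the continuous scanning, and the aerial vehicle is used only for the places the UGV cannot reach.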

To address the second limitation, CARL is currently developing a mobile robot, built on a Husky UGV platform and equipped with a stereo camera and a KINOVA JACO robotic arm, that can remove obstacles along its path (see Figure 3). This capability spares the robot from having to change direction and take a longer route when collecting data along a predetermined path.

Figure 3. Autonomous obstacle removal and material handling system, consisting of a UGV with a vision-based sensor and a robotic arm.

To learn more about Husky UGV, click here.

To learn more about the Construction Automation and Robotics Lab, click here.

To learn more about the Active Robotic Sensing (ARoS) Laboratory, click here.

To learn more about the Laboratory for Interpretable Visual Modeling, Computing and Learning (iVMCL), click here.